Website URL Scraping Service

We extract all the links from a website and deliver them to you as ready-to-use data.

What is website URL scraping used for?

Crawling a website for its links serves the following purposes:

Content scraping

Before website content can be scraped, the URLs of all its pages need to be extracted. A complete URL list also helps make sure that no piece of content is missed.
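As a rough illustration of the URL extraction step, here is a minimal Python sketch that collects the links from a single HTML page using only the standard library. The names `LinkCollector` and `extract_links` and the `example.com` URLs are made up for this example; a real crawler would also fetch pages, follow the links it finds, and respect the site's robots.txt.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urldefrag


class LinkCollector(HTMLParser):
    """Collects absolute URLs from the href attribute of <a> tags."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links and drop the "#fragment" part.
                absolute, _fragment = urldefrag(urljoin(self.base_url, value))
                # Keep each URL once, in the order it first appears.
                if absolute not in self.links:
                    self.links.append(absolute)


def extract_links(html, base_url):
    """Return the unique absolute URLs linked from one HTML document."""
    parser = LinkCollector(base_url)
    parser.feed(html)
    return parser.links


if __name__ == "__main__":
    sample = '<a href="/bikes/road">Road</a> <a href="about.html">About</a>'
    for link in extract_links(sample, "https://example.com/"):
        print(link)
```

Running the same function over every fetched page, and queuing each new URL it returns, is one simple way to build the full URL list before any content is scraped.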

SEO tasks

Crawling URLs can be used to analyze the cross-links and keywords of a particular website. Many ready-to-use tools exist for such tasks.
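For readers curious what a cross-link analysis looks like in practice, the following Python sketch counts how many pages of the same site link to each page. It assumes the crawl has already produced a mapping from each page URL to the links found on it; the `inbound_link_counts` name and the shape of `link_graph` are assumptions for this example, not the output format of any particular tool.

```python
from collections import Counter
from urllib.parse import urlparse


def inbound_link_counts(link_graph):
    """Count internal inbound links per page.

    link_graph maps a page URL to the list of URLs that page links to.
    """
    counts = Counter()
    for source, targets in link_graph.items():
        source_host = urlparse(source).netloc
        # set() counts each linking page once per target, even if it
        # links to the same target several times.
        for target in set(targets):
            same_site = urlparse(target).netloc == source_host
            if same_site and target != source:
                counts[target] += 1
    return counts


if __name__ == "__main__":
    graph = {
        "https://example.com/": ["https://example.com/a", "https://example.com/b"],
        "https://example.com/a": ["https://example.com/b"],
    }
    for url, n in inbound_link_counts(graph).most_common():
        print(n, url)
```

Pages with few or no inbound internal links are often the ones worth reviewing first in an SEO audit.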

Market research

Even without scraping all of the content from a website, you can learn a lot about your competitors or other market players by scraping URLs. For example, scraped and parsed website URLs can reveal how many products a store carries, what they are called, and other parameters. This can be very helpful for quick market research or competitor analysis.
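To make this concrete, here is a small Python sketch that derives product counts and names from URLs alone. It assumes a hypothetical URL layout of `/products/<category>/<product-slug>`; real shops structure their URLs differently, so the pattern would need to be adapted for each site. The function name `summarize_product_urls` is also just an illustration.

```python
from collections import Counter
from urllib.parse import urlparse


def summarize_product_urls(urls, prefix="products"):
    """Return (products per category, product names) parsed from URL paths.

    Assumes paths shaped like /products/<category>/<product-slug>.
    URLs that do not match this shape are ignored.
    """
    counts = Counter()
    names = []
    for url in urls:
        parts = [p for p in urlparse(url).path.split("/") if p]
        if len(parts) == 3 and parts[0] == prefix:
            _, category, slug = parts
            counts[category] += 1
            # Turn a slug such as "aero-x1" into a readable name.
            names.append(slug.replace("-", " "))
    return counts, names


if __name__ == "__main__":
    sample_urls = [
        "https://shop.example/products/road-bikes/aero-x1",
        "https://shop.example/products/road-bikes/sprint-200",
        "https://shop.example/products/helmets/city-lid",
        "https://shop.example/about",
    ]
    counts, names = summarize_product_urls(sample_urls)
    print(dict(counts))
    print(names)
```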

Tool, Service, or Custom Solution?

There are ready-to-use tools on the market that allow you to crawl and scrape URLs by yourself. Depending on your purposes, you can choose between using a tool or ordering the service or even a custom solution from DivaBizTech.

Ready-to-use software is a reasonable choice when your task is small and simple. For example, if you need to scrape all links from your own small website to find broken links, a ready-made tool might work best for you.

If you want to process your URLs after link scraping is complete, get a consultation from our web scraping expert. Once we understand your purpose, we can advise you on the best option.

Project Example

One of our clients runs a bicycle sales business. He had an old website that had been running for years and decided to build a new one. To avoid losing a single item from the old site, he ordered DataOx’s URL scraping service.

What We Did

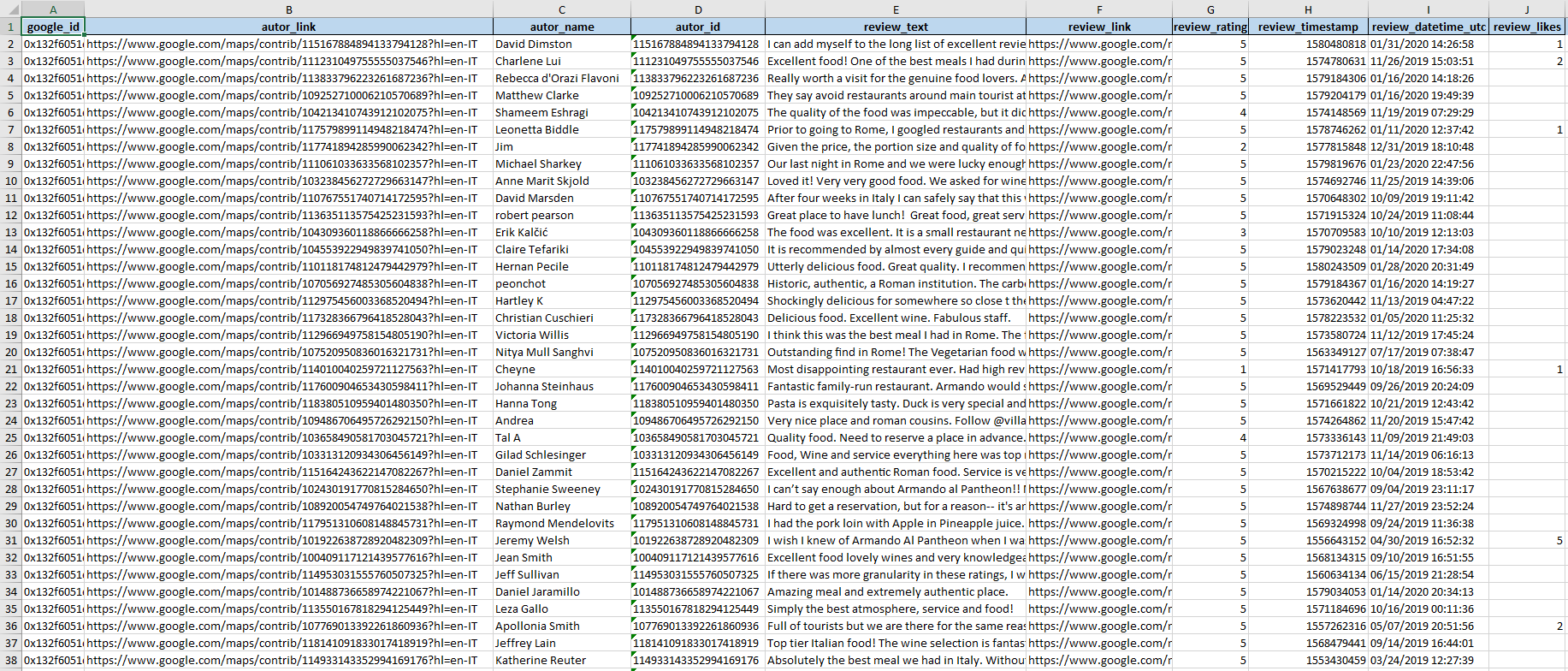

We scraped all the URLs and the content of each page on the old bicycle-selling website, structured the data into a database, and provided it to our client.

The most important requirement was not to miss any piece of content. So after extracting the data from the old website, our quality assurance department checked all items and compared them to the original web source. The original website contained more than one thousand web pages, so this thorough check was key to finishing the project successfully.
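Part of such a completeness check can be automated. The Python sketch below is an illustration only, not a description of the actual process used on this project: it compares the list of URLs crawled from the original site with the URLs of the records that ended up in the database and reports anything missing. The function names and the trailing-slash normalization are assumptions made for the example.

```python
def normalize(url):
    """Treat URLs that differ only by whitespace or a trailing slash as equal."""
    return url.strip().rstrip("/")


def find_missing_urls(source_urls, extracted_urls):
    """Return source URLs that have no matching extracted record, sorted."""
    extracted = {normalize(u) for u in extracted_urls}
    source = {normalize(u) for u in source_urls}
    return sorted(source - extracted)


if __name__ == "__main__":
    crawled = [
        "https://old.example/bikes/1/",
        "https://old.example/bikes/2",
        "https://old.example/bikes/3",
    ]
    in_database = ["https://old.example/bikes/1", "https://old.example/bikes/3/"]
    for url in find_missing_urls(crawled, in_database):
        print("missing:", url)
```

An empty result means every crawled URL has a matching record; a manual review of the content itself is still needed to confirm that each record is complete.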